What is DeepSeek AI? (Features, OpenAI Comparison, & More)

Founded in 2023, DeepSeek began researching and developing new AI tools - specifically open-source large language models.

Fast-forward less than two years, and the company has quickly become a name to know in the space. Their AI models rival industry leaders like OpenAI and Google but at a fraction of the cost.

Within two weeks of the release of its first free chatbot app, the mobile app skyrocketed to the top of the app store charts in the United States.

The company's latest AI model also triggered a global tech selloff that wiped out nearly $1 trillion in market cap from companies like Nvidia, Oracle, and Meta.

With this impact in mind, here's a breakdown of everything you'll learn about DeepSeek in this post:

- What Is DeepSeek?

- How Many People Use DeepSeek?

- DeepSeek's Initial Growth

- DeepSeek Models & Release History

- DeepSeek Vs. OpenAI: Benchmarks & Performance

- DeepSeek-R1 Pricing & Costs

- How To Access DeepSeek

- Is DeepSeek Better Than ChatGPT?

What Is DeepSeek?

DeepSeek is a Chinese artificial intelligence startup that operates under High-Flyer, a quantitative hedge fund based in Hangzhou, China.

Liang Wenfeng is the founder and CEO of DeepSeek. He co-founded High-Flyer in 2016, which later became the sole backer of DeepSeek.

The company has developed a series of open-source models that rival some of the world's most advanced AI systems, including OpenAI’s ChatGPT, Anthropic’s Claude, and Google’s Gemini.

However, unlike many of its US competitors, DeepSeek is open-source and free to use.

It has also gained the attention of major media outlets because it claims to have been trained at a significantly lower cost of less than $6 million, compared to $100 million for OpenAI's GPT-4.

Some are referring to the DeepSeek release as a Sputnik moment for AI in America.

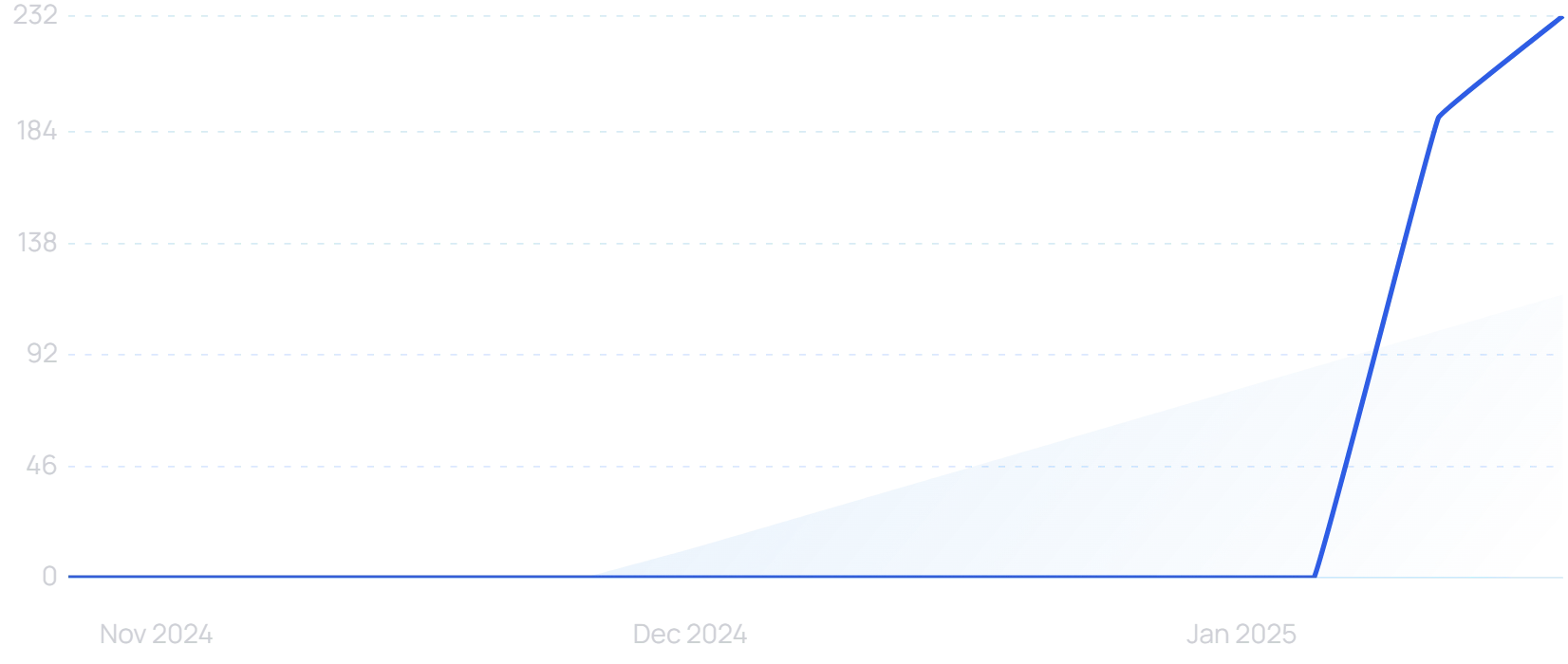

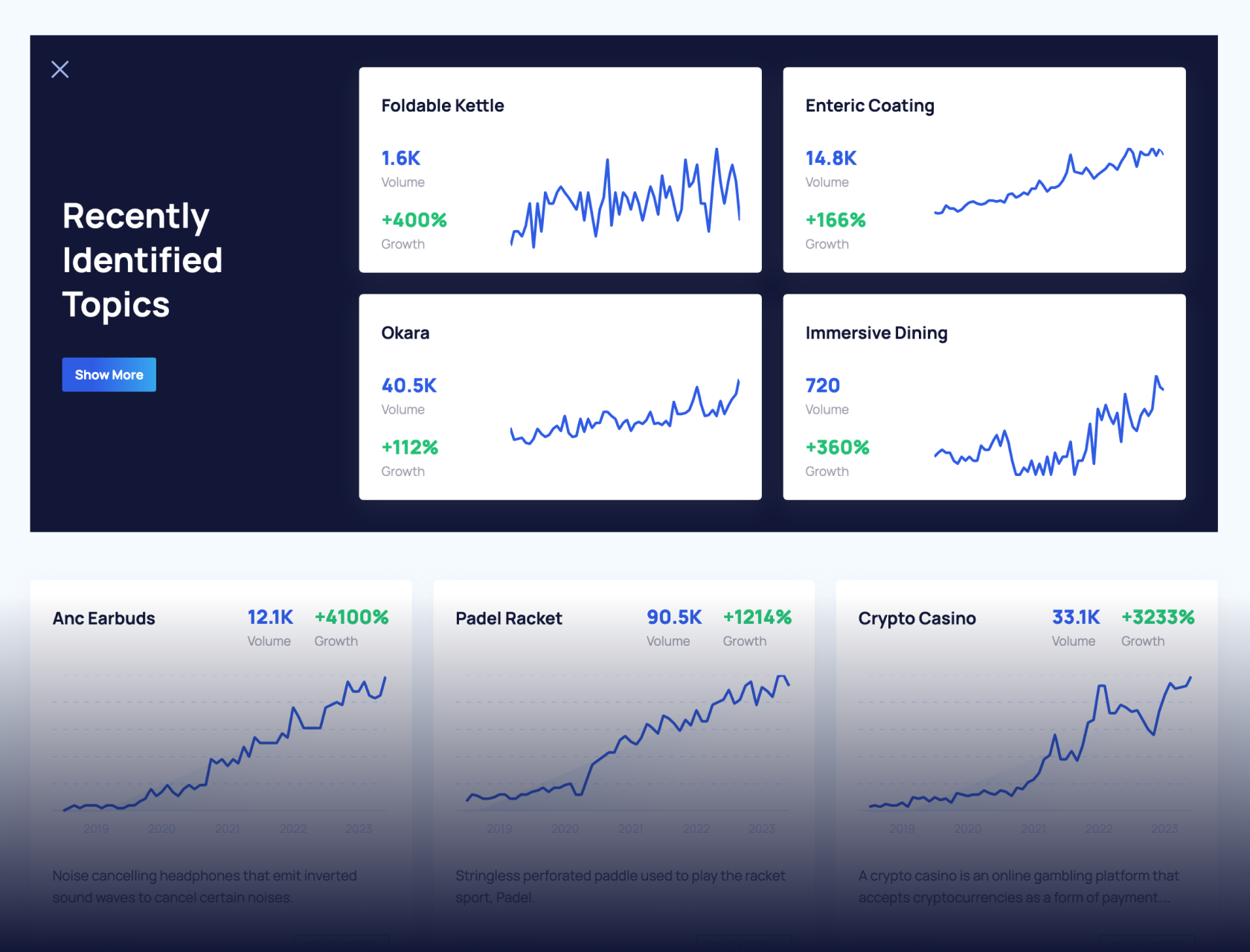

The tech world has certainly taken notice. According to data from Exploding Topics, interest in the Chinese AI company has increased by 99x in just the last three months due to the release of their latest model and chatbot app.

“DeepSeek AI” searches are up 99X+ in 3 months.

How Many People Use DeepSeek?

According to the latest data, DeepSeek supports more than 10 million users.

The app has been downloaded over 10 million times on the Google Play Store since its release.

HuggingFace reported that DeepSeek models have more than 5 million downloads on the platform. This includes the creation of 500+ derivative DeepSeek models.

It will be interesting to see how other AI chatbots adjust to DeepSeek’s open-source release and growing popularity, and whether the Chinese startup can continue growing at this rate.

Semrush reports that DeepSeek’s website traffic increased from 4.6 million monthly visits to 12.6 million between November and December 2024.

DeepSeek's Initial Growth

When ChatGPT was released, it quickly acquired 1 million users in just 5 days. By day 40, ChatGPT was serving 10 million users.

DeepSeek, launched in January 2025, took a slightly different path to success. It reached its first million users in 14 days, nearly three times as long as ChatGPT took.

However, DeepSeek's growth then accelerated dramatically. The platform hit the 10 million user mark in just 20 days - half the time it took ChatGPT to reach the same milestone.
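To make the pace of that acceleration concrete, the milestone figures above can be turned into an average signup rate between the 1 million and 10 million marks. This is a rough sketch using only the day counts quoted in this post; the names `MILESTONES` and `avg_daily_signups` are illustrative, and the calculation assumes growth was spread evenly between the two milestones.

```python
# Days after launch to reach each user milestone, as quoted in this post.
MILESTONES = {
    "DeepSeek": {"1M": 14, "10M": 20},
    "ChatGPT": {"1M": 5, "10M": 40},
}


def avg_daily_signups(days_to_1m: int, days_to_10m: int) -> float:
    """Average new users per day between the 1M and 10M milestones."""
    return (10_000_000 - 1_000_000) / (days_to_10m - days_to_1m)


for name, m in MILESTONES.items():
    rate = avg_daily_signups(m["1M"], m["10M"])
    print(f"{name}: ~{rate:,.0f} new users per day between 1M and 10M")

# The two ratios mentioned in the text: time to 1M and time to 10M.
first_million_ratio = MILESTONES["DeepSeek"]["1M"] / MILESTONES["ChatGPT"]["1M"]
ten_million_ratio = MILESTONES["DeepSeek"]["10M"] / MILESTONES["ChatGPT"]["10M"]
print(f"Time to 1M: {first_million_ratio:.1f}x ChatGPT's")
print(f"Time to 10M: {ten_million_ratio:.1f}x ChatGPT's")
```

By this estimate, DeepSeek added about 1.5 million users per day over that stretch, compared with roughly 257,000 per day for ChatGPT.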

Here’s a comparison of popular online services and how long it took them to reach the same 1 million and 10 million user mark:

| Online Service | Launch Year | Time Taken to Reach 1 Million Users | Time Taken to Reach 10 Million Users |
| --- | --- | --- | --- |
| DeepSeek | 2025 | 14 days | 20 days |
| ChatGPT | 2022 | 5 days | 40 days |
| Perplexity AI | 2022 | 2 months | 13 months |
|  | 2010 | 2.5 months | 355 days |
|  | 2004 | 10 months | 852 days |
| Threads | 2023 | 1 hour | 7 hours |
|  | 2006 | 2 years | 780 days |
| Netflix | 1999 | 3.5 years | 9 years |

Shortly after the 10 million user mark, ChatGPT hit 100 million monthly active users in January 2023 (approximately 60 days after launch).

It will be interesting to see if DeepSeek can continue to grow at a similar rate over the next few months.

DeepSeek Models & Release History

Since the company was founded, they have developed a number of AI models. Their latest model, DeepSeek-R1, is open-source and considered the most advanced.

Here is a look at the release history of DeepSeek AI models:

| Model | Release Date | Parameters | Key Differentiators | Best For |
| --- | --- | --- | --- | --- |
| DeepSeek Coder | November 2023 | 1B to 33B | Open-source, 87% code and 13% natural language training | Coding tasks, software development |
| DeepSeek LLM | December 2023 | 67B | General-purpose model, approached GPT-4 performance | Broad language understanding |
| DeepSeek-V2 | May 2024 | 236B total, 21B active | Multi-head Latent Attention (MLA), DeepSeekMoE architecture | Efficient inference, economical training |
| DeepSeek-Coder-V2 | July 2024 | 236B | 128,000 token context window, supports 338 programming languages | Complex coding challenges, mathematical reasoning |
| DeepSeek-V3 | December 2024 | 671B total, 37B active | Mixture-of-experts architecture, FP8 mixed precision training | Multi-domain language understanding, cost-effective performance |
| DeepSeek-R1 | January 2025 | 671B total, 37B active | Pure reinforcement learning approach, open-source | Advanced reasoning, problem-solving, competitive with OpenAI's o1 model |

DeepSeek Coder (November 2023)

DeepSeek Coder was the company's first AI model, designed for coding tasks.

It was trained on 87% code and 13% natural language, offering free open-source access for research and commercial use.

DeepSeek LLM (December 2023)

DeepSeek LLM was the company's first general-purpose large language model.

With 67 billion parameters, it approached GPT-4 level performance and demonstrated DeepSeek's ability to compete with established AI giants in broad language understanding.

DeepSeek-V2 (May 2024)

DeepSeek-V2 introduced innovative Multi-head Latent Attention and DeepSeekMoE architecture.

The model has 236 billion total parameters with 21 billion active, significantly improving inference efficiency and training economics.

DeepSeek-Coder-V2 (July 2024)

DeepSeek-Coder-V2 expanded the capabilities of the original coding model.

It featured 236 billion parameters, a 128,000 token context window, and support for 338 programming languages, which let it handle more complex coding tasks.

DeepSeek-V3 (December 2024)

DeepSeek-V3 marked a major milestone with 671 billion total parameters and 37 billion active.

The model incorporated advanced mixture-of-experts architecture and FP8 mixed precision training, setting new benchmarks in language understanding and cost-effective performance.
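The "total" and "active" parameter counts reflect how mixture-of-experts models work: only a subset of the parameters is used for any given token. As a rough illustration, the snippet below computes the active share for the two models whose figures are quoted in this post. The names `MODELS` and `active_share` are illustrative, and the numbers are the rounded billions given above.

```python
# (total parameters, active parameters) in billions, as quoted in this post.
MODELS = {
    "DeepSeek-V2": (236, 21),
    "DeepSeek-V3": (671, 37),
}


def active_share(total_b: float, active_b: float) -> float:
    """Fraction of the model's parameters that are active per token."""
    return active_b / total_b


for name, (total_b, active_b) in MODELS.items():
    share = active_share(total_b, active_b)
    print(f"{name}: {active_b}B of {total_b}B parameters active ({share:.1%})")
```

On these figures, DeepSeek-V2 activates about 8.9% of its parameters per token and DeepSeek-V3 about 5.5%, which is where much of the inference efficiency comes from.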

DeepSeek-R1 (January 2025)

DeepSeek-R1 is the company's latest model, focusing on advanced reasoning capabilities.

Trained using pure reinforcement learning, it competes with top models in complex problem-solving, particularly in mathematical reasoning.

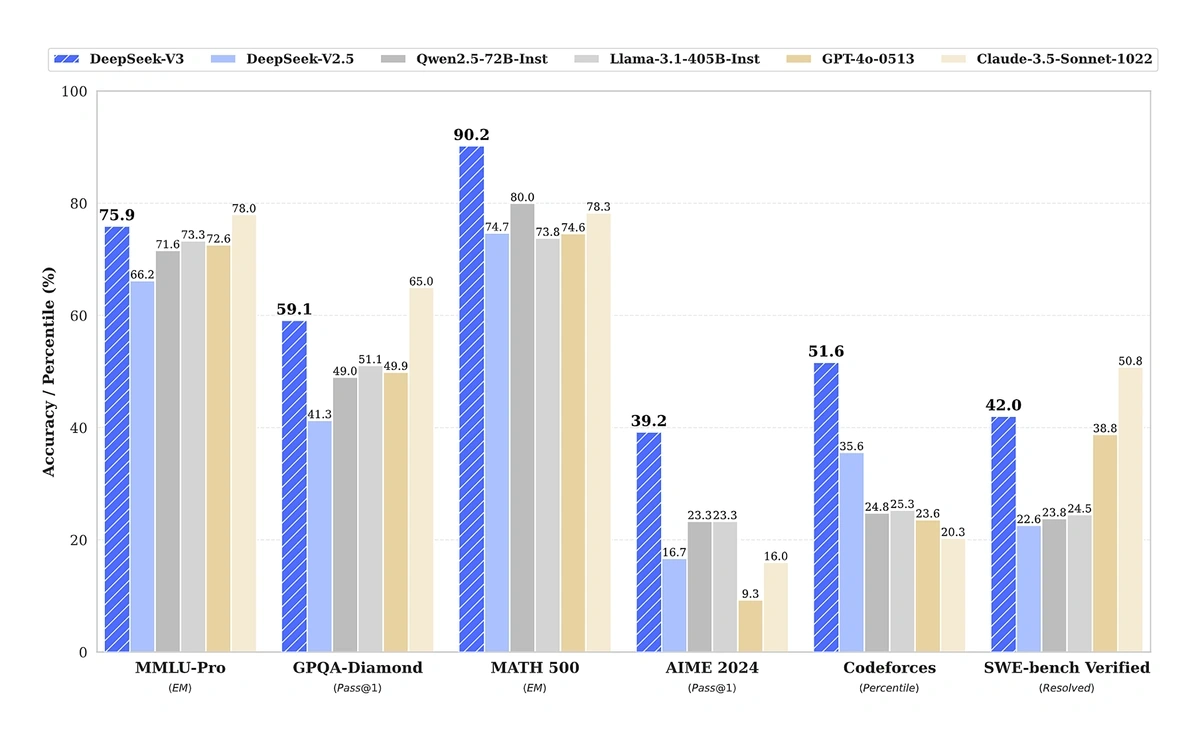

DeepSeek Vs. OpenAI: Benchmarks & Performance

OpenAI has been the undisputed leader in the AI race, but DeepSeek has recently stolen some of the spotlight.

Below, we highlight performance benchmarks for each model and show how they stack up against one another in key categories: mathematics, coding, and general knowledge.

Performance benchmarks of DeepSeek-R1 and OpenAI-o1 models.

Mathematics Benchmarks

DeepSeek-R1 shows strong performance in mathematical reasoning tasks. In fact, it beats out OpenAI in both key benchmarks.

On AIME 2024, it scores 79.8%, slightly above OpenAI o1-1217's 79.2%. This evaluates advanced multistep mathematical reasoning.

For MATH-500, DeepSeek-R1 leads with 97.3%, compared to OpenAI o1-1217's 96.4%. This test covers diverse high-school-level mathematical problems requiring detailed reasoning.

Coding Benchmarks

Both models demonstrate strong coding capabilities.

On Codeforces, OpenAI o1-1217 leads by ranking in the 96.6th percentile, while DeepSeek-R1 reaches the 96.3rd percentile. This benchmark evaluates coding and algorithmic reasoning capabilities on competitive programming problems.

For SWE-bench Verified, DeepSeek-R1 scores 49.2%, slightly ahead of OpenAI o1-1217's 48.9%. This benchmark focuses on software engineering tasks and verification.

General Knowledge Benchmarks

One noticeable difference in the models is their general knowledge strengths.

On GPQA Diamond, OpenAI o1-1217 leads with 75.7%, while DeepSeek-R1 scores 71.5%. This measures the model’s ability to answer graduate-level science questions in biology, physics, and chemistry.

For MMLU, OpenAI o1-1217 slightly outperforms DeepSeek-R1 with 91.8% versus 90.8%. This benchmark evaluates multitask language understanding.

DeepSeek-R1 is a worthy OpenAI competitor, specifically in reasoning-focused AI. While OpenAI's o1 maintains a slight edge in coding and factual reasoning tasks, DeepSeek-R1's open-source access and low costs are appealing to users.
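The six results above are easier to compare side by side. The short sketch below tabulates the margin between the two models on each benchmark, using only the scores quoted in this section; `SCORES` and `margin` are illustrative names, and a positive margin means DeepSeek-R1 is ahead.

```python
# (DeepSeek-R1, OpenAI o1-1217) results as quoted in this section.
SCORES = {
    "AIME 2024": (79.8, 79.2),
    "MATH-500": (97.3, 96.4),
    "Codeforces": (96.3, 96.6),
    "SWE-bench Verified": (49.2, 48.9),
    "GPQA Diamond": (71.5, 75.7),
    "MMLU": (90.8, 91.8),
}


def margin(benchmark: str) -> float:
    """DeepSeek-R1's result minus o1-1217's, in percentage points."""
    r1, o1 = SCORES[benchmark]
    return round(r1 - o1, 1)


for name in SCORES:
    leader = "DeepSeek-R1" if margin(name) > 0 else "OpenAI o1-1217"
    print(f"{name}: {margin(name):+.1f} points ({leader} leads)")

r1_wins = [name for name in SCORES if margin(name) > 0]
print(f"DeepSeek-R1 leads on {len(r1_wins)} of {len(SCORES)} benchmarks")
```

On these numbers the models split the six benchmarks evenly, and every gap except GPQA Diamond is one point or less.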

DeepSeek-R1 Pricing & Costs

According to reports, DeepSeek's training cost was just $5.58 million. That figure comes from the technical report for DeepSeek-V3, the base model on which R1 is built.

This figure is significantly lower than the hundreds of millions (or billions) American tech giants spent creating alternative LLMs.

For instance, it's reported that OpenAI spent between $80 million and $100 million on GPT-4 training.

OpenAI's CEO, Sam Altman, has also stated that the cost was over $100 million. However, it's worth noting that this likely includes additional expenses beyond training, such as research, data acquisition, and salaries.

The other noticeable difference in costs is the pricing for each model. While DeepSeek is currently free to use and ChatGPT does offer a free plan, API access comes with a cost.

Below is a comparison of what users can expect to spend based on usage:

| Model | Context Length | Max CoT Tokens | Max Output Tokens | 1M Tokens Input Price (Cache Hit) | 1M Tokens Input Price (Cache Miss) | 1M Tokens Output Price |
| --- | --- | --- | --- | --- | --- | --- |
| deepseek-chat | 64K | - | 8K | $0.07 | $0.27 | $1.10 |
| deepseek-reasoner | 64K | 32K | 8K | $0.14 | $0.55 | $2.19 |
| gpt-4o | 128K | - | - | $1.25 | $2.50 | $10.00 |

DeepSeek's pricing is significantly lower across the board, with input and output costs a fraction of what OpenAI charges for GPT-4o.

While GPT-4o supports a larger context length (128K vs. 64K tokens), its cached input price is roughly 8.9 times higher than deepseek-reasoner's ($1.25 vs. $0.14 per 1 million tokens).
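To see what these per-token prices mean for a real workload, here is a small cost estimator built from the pricing table. It is a sketch rather than an official calculator: the helper names `estimate_cost` and `price_ratio` are illustrative, the prices are the ones listed in this post and may have changed, and the example workload (2 million input tokens, 500,000 output tokens, no cache hits) is made up for illustration.

```python
# USD per 1M tokens: (input on cache hit, input on cache miss, output),
# copied from the pricing table in this post.
PRICES = {
    "deepseek-chat": (0.07, 0.27, 1.10),
    "deepseek-reasoner": (0.14, 0.55, 2.19),
    "gpt-4o": (1.25, 2.50, 10.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  cache_hit: bool = False) -> float:
    """Estimated USD cost for a given number of input and output tokens."""
    hit, miss, out = PRICES[model]
    input_price = hit if cache_hit else miss
    return (input_tokens * input_price + output_tokens * out) / 1_000_000


def price_ratio(model_a: str, model_b: str, column: int) -> float:
    """How many times more model_a charges than model_b for one price column."""
    return PRICES[model_a][column] / PRICES[model_b][column]


# Hypothetical workload: 2M input tokens and 500K output tokens, no caching.
for model in PRICES:
    print(f"{model}: ${estimate_cost(model, 2_000_000, 500_000):.2f}")

# gpt-4o versus deepseek-reasoner on each price column.
for column, label in enumerate(["cache hit", "cache miss", "output"]):
    ratio = price_ratio("gpt-4o", "deepseek-reasoner", column)
    print(f"{label}: {ratio:.2f}x")
```

The size of the gap depends on which column you compare: it is widest for cached input and narrower for uncached input and output.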

How to Access DeepSeek

Rate limits and restricted signups are making it hard for people to access DeepSeek. Fortunately, there are three primary ways to get started:

- DeepSeek’s web-based platform

- DeepSeek API

- DeepSeek mobile app

DeepSeek Web Access

The most straightforward way to access DeepSeek chat is through their web interface. Visit their homepage and click "Start Now" or go directly to the chat page.

On the chat page, you’ll be prompted to sign in or create an account.

After signing up, you can access the full chat interface. Users can select the “DeepThink” feature before submitting a query to get results using DeepSeek-R1’s reasoning capabilities.

DeepSeek API

DeepSeek offers programmatic access to its R1 model through an API that allows developers to integrate advanced AI capabilities into their applications.

To get started with the DeepSeek API, you'll need to register on the DeepSeek Platform and obtain an API key.

For detailed instructions on how to use the API, including authentication, making requests, and handling responses, you can refer to DeepSeek's API documentation.
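As a starting point, here is a minimal sketch of what a request might look like using only Python's standard library. It rests on several assumptions that you should check against DeepSeek's API documentation: that the API accepts OpenAI-style chat completion requests at the URL shown, that the key is passed as a bearer token, and that the reply follows the OpenAI response layout. The model name `deepseek-reasoner` comes from the pricing table above; `build_chat_request` and `send` are illustrative helper names.

```python
import json
import urllib.request

# Assumption: an OpenAI-style chat completions endpoint at this URL.
# Confirm the exact URL and fields in DeepSeek's API documentation.
API_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "deepseek-reasoner") -> urllib.request.Request:
    """Build (but do not send) a chat request for a single user prompt."""
    payload = {
        "model": model,  # model name as listed in the pricing table
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the reply text (requires network access)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumption: the response follows the OpenAI chat completions layout.
    return body["choices"][0]["message"]["content"]
```

With a real key stored in an environment variable, a call would look like `send(build_chat_request(os.environ["DEEPSEEK_API_KEY"], "Explain mixture-of-experts in one sentence."))`. Keeping the request builder separate from the network call makes it easy to inspect or log the payload before spending any tokens.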

DeepSeek Mobile App

DeepSeek is available on both iOS and Android platforms.

Simply search for "DeepSeek" in your device's app store, install the app, and follow the on-screen prompts to create an account or sign in.

Will DeepSeek Get Banned In The US?

As the TikTok ban looms in the United States, this is always a question worth asking about a new Chinese company.

White House Press Secretary Karoline Leavitt recently confirmed that the National Security Council is investigating whether DeepSeek poses a potential national security threat.

However, there is no indication that DeepSeek will face a ban in the US. President Donald Trump has called DeepSeek's breakthrough a "wake-up call" for the American tech industry.

We’ll likely see more app-related restrictions in the future. For instance, the U.S. Navy banned its personnel from using DeepSeek's applications due to security and ethical concerns.

Despite these concerns, banning DeepSeek could be challenging because it is open-source. While app stores could restrict the mobile app, removing the model from platforms like GitHub is unlikely.

Is DeepSeek Better Than ChatGPT?

The AI space is arguably the fastest-growing industry right now. And DeepSeek's rise has certainly caught the attention of the global tech industry.

While it's tempting to label DeepSeek as the new ChatGPT alternative, the reality is more nuanced.

DeepSeek’s performance in various benchmarks, particularly in coding and mathematical reasoning, is on par with OpenAI's o1, the model it was benchmarked against above.

That, along with the cost-effectiveness of DeepSeek's API, is a significant draw for developers and businesses looking to integrate AI capabilities into their products.

However, skeptics in the AI space believe we are not being told the whole story about DeepSeek’s training costs and GPU usage.

It’s far too early to remove ChatGPT’s crown as the king of chatbots, but DeepSeek's rapid progress serves as a reminder of what's possible in this field.

Written By

Anthony is a Content Writer at Exploding Topics. Before joining the team, Anthony spent over four years managing content strat...