AI healthcare booms as 7 in 10 US healthcare leaders expect improved profits in 2025

A report from Deloitte has found that 71% of healthcare industry leaders expect improved profitability in 2025. This projection comes as AI technology drives significant transformation in the sector.

The survey found that 59% of executives have an overall positive industry outlook for 2025, while 69% expect operating revenue to increase. However, there are challenges to overcome.

Healthcare affordability is a hot topic in the US, and industry leaders expect that to be an overarching theme this year. Improving consumer experience, engagement, and trust is considered “very important” by more than half of those surveyed.

In this regard, the healthcare industry will need to meet concerns about AI head-on. A separate Deloitte study found that consumer distrust in AI-generated health information rose among all age groups in 2024, and 41% of physicians have concerns about the impact of the technology on patient privacy.

Even so, there’s no doubt that AI has the potential to bring major improvements to healthcare, both in terms of patient experience and company profits. From precision medicine to drug discovery and robotic surgery, here’s how the industry is evolving.

Efficient AI as a driver of healthcare profits

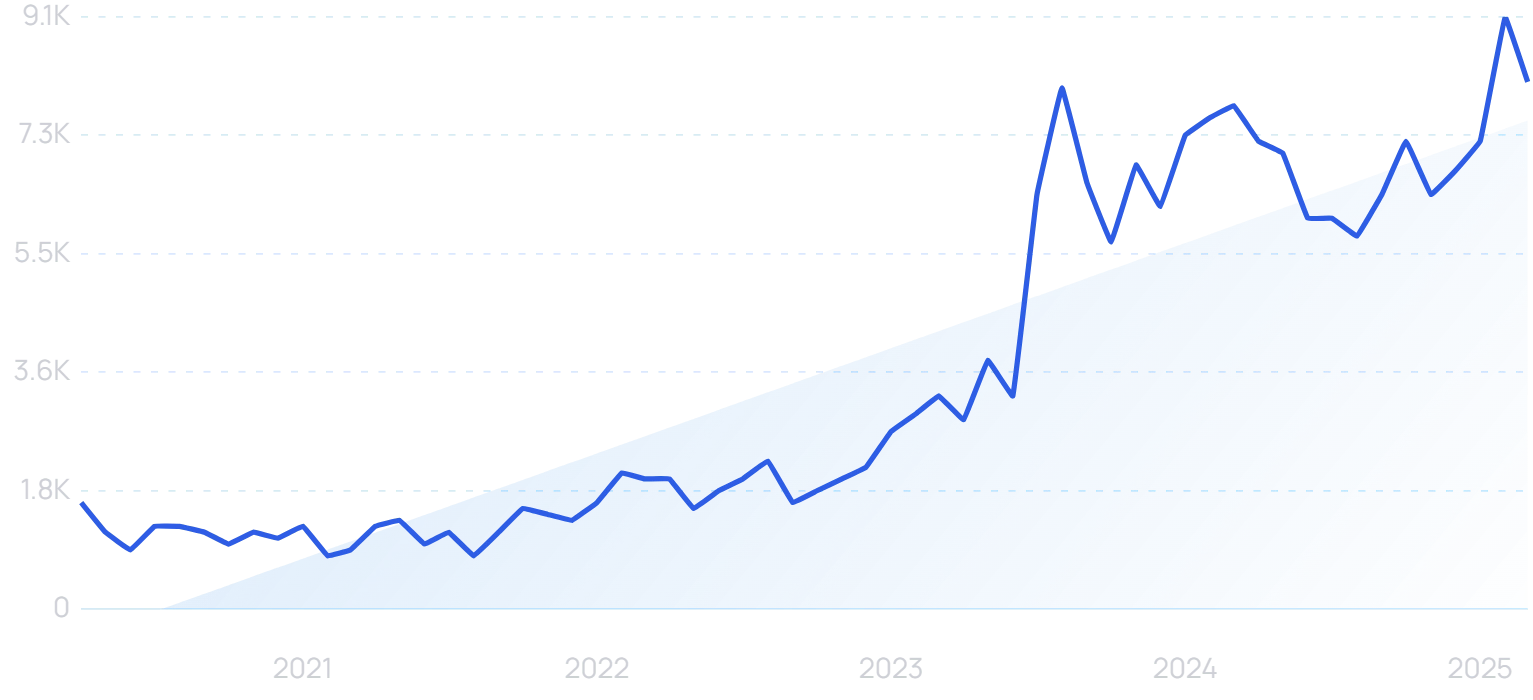

Just like in other industries, the future role of AI in healthcare is to simplify existing processes. A paper published by the National Bureau of Economic Research estimates that wider adoption of AI in the US healthcare system could generate annual savings of $200 billion to $360 billion.

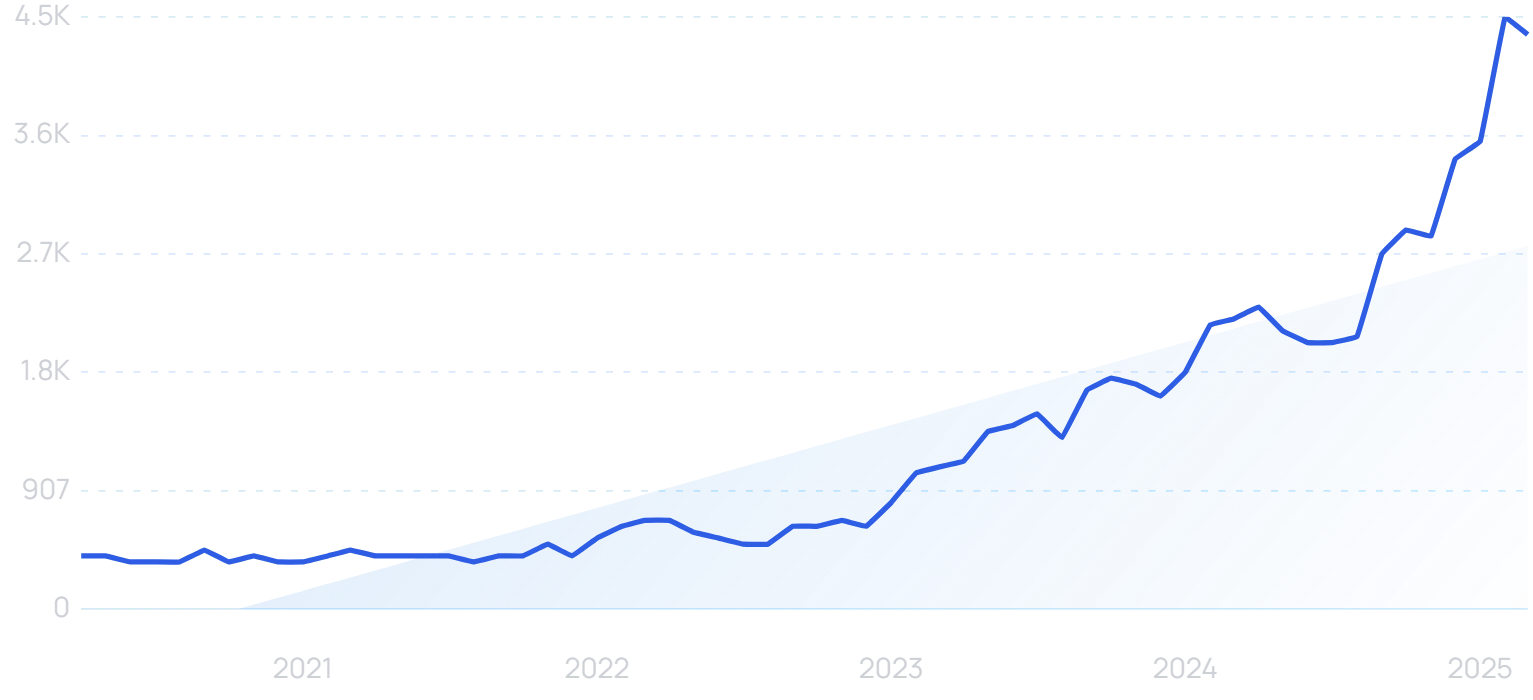

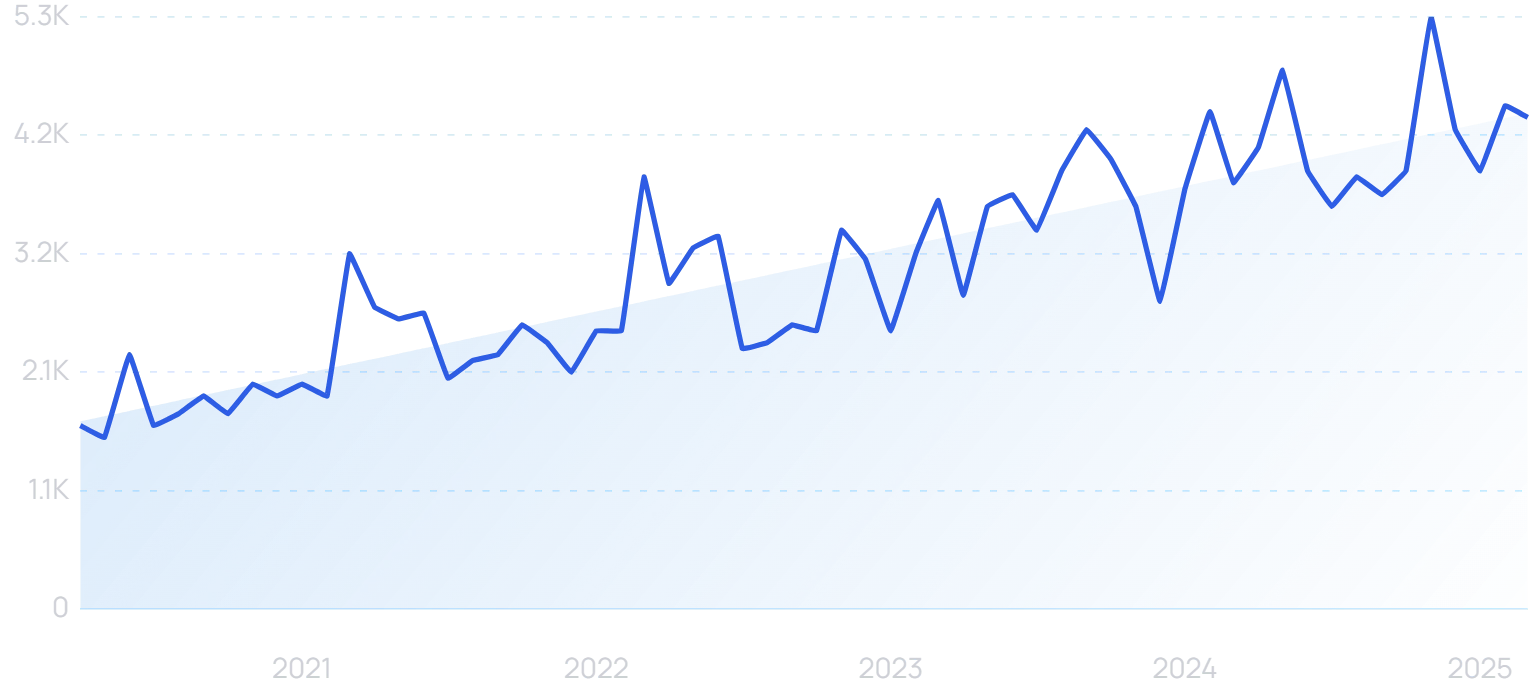

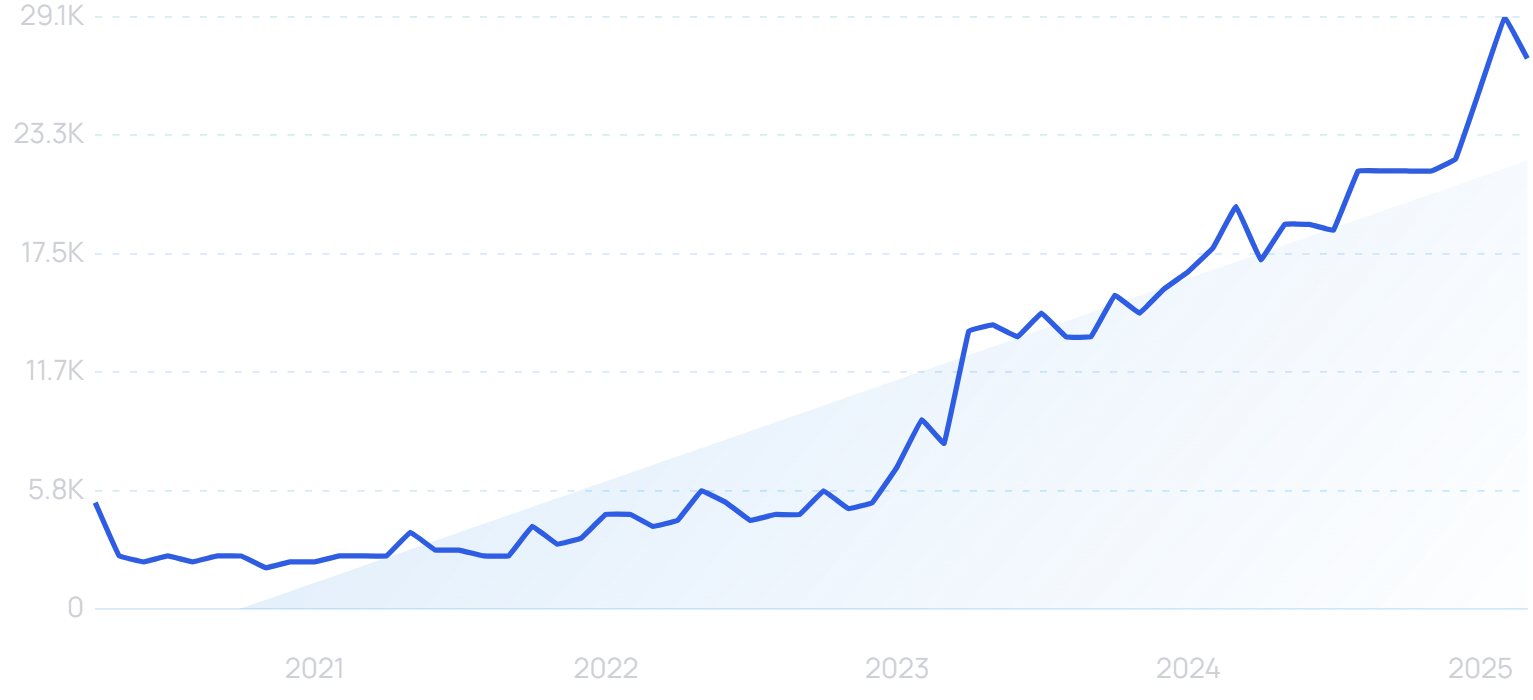

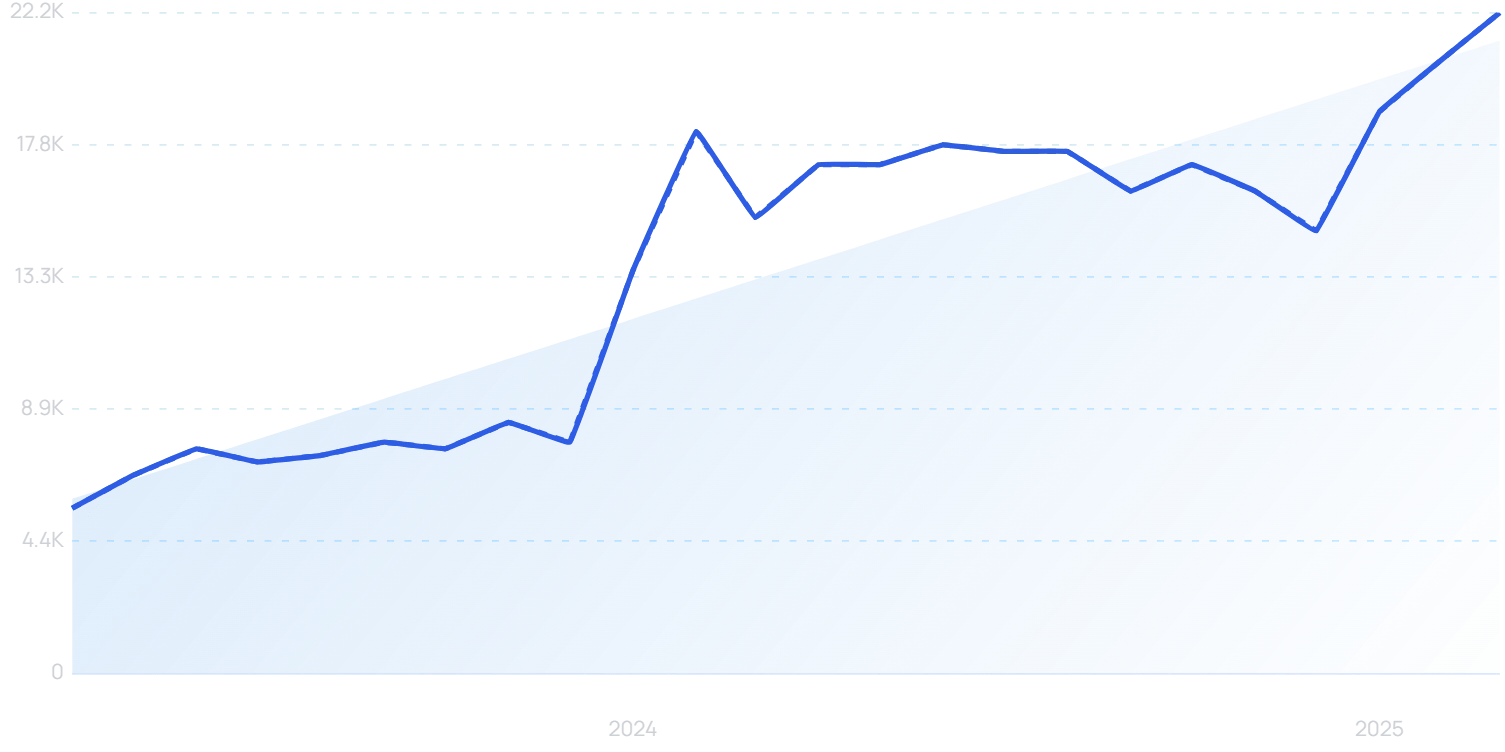

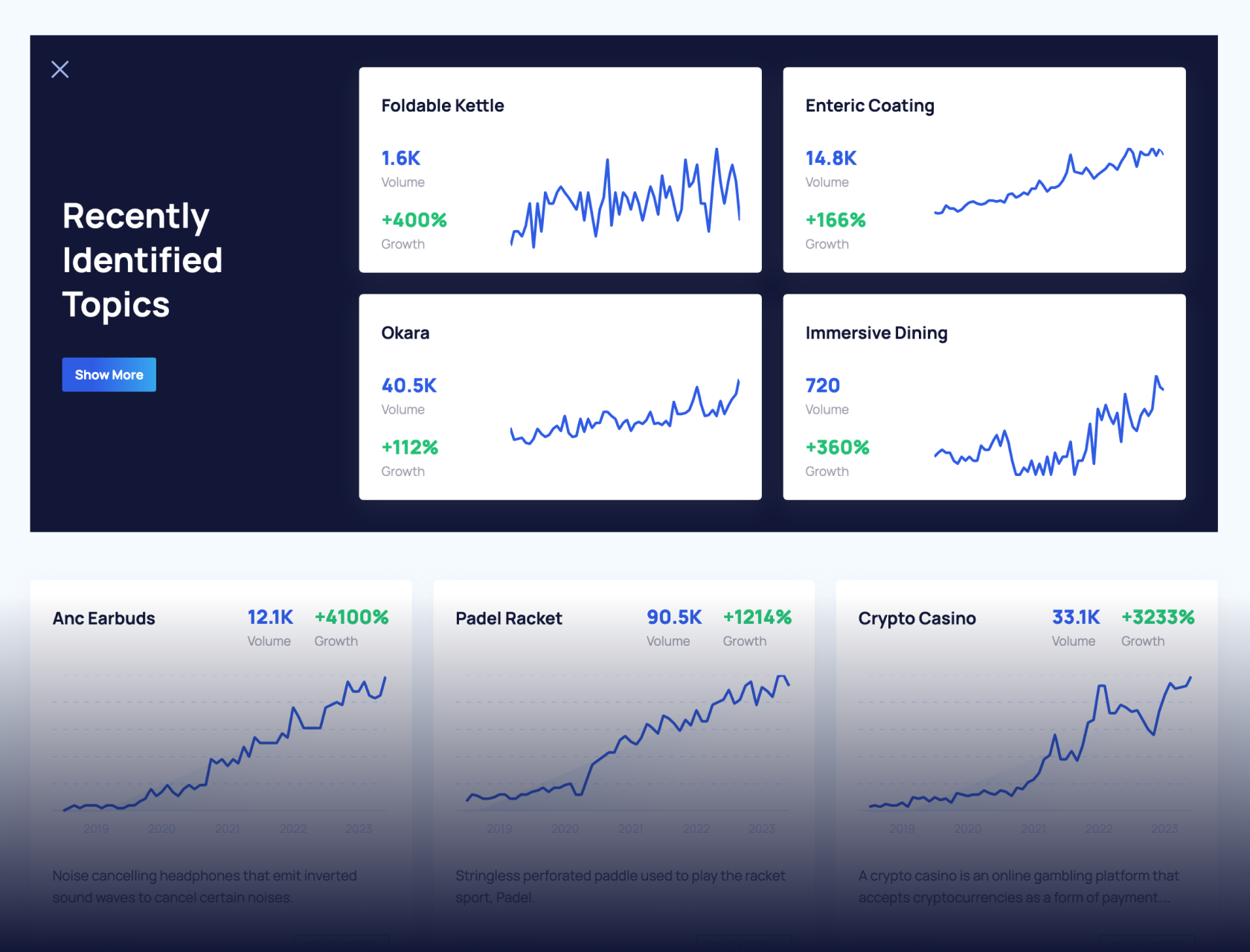

“Healthcare AI” searches are up 978% in the last 5 years.

Studies from the University of Oxford have found that no single healthcare occupation can be entirely automated — and that even in areas with high automation potential, automation desirability is often lower. Human contact remains a critical component of primary care.

Instead, AI is well-placed to significantly improve the efficiency of the jobs already being done by caregivers. Goldman Sachs estimates that 28% of work tasks performed by healthcare technicians and practitioners in the US could be automated.

And 92% of healthcare leaders believe automating repetitive tasks is crucial for addressing staff shortages.

As a result, the global healthcare automation market is projected to be worth $119.5 billion by 2033.

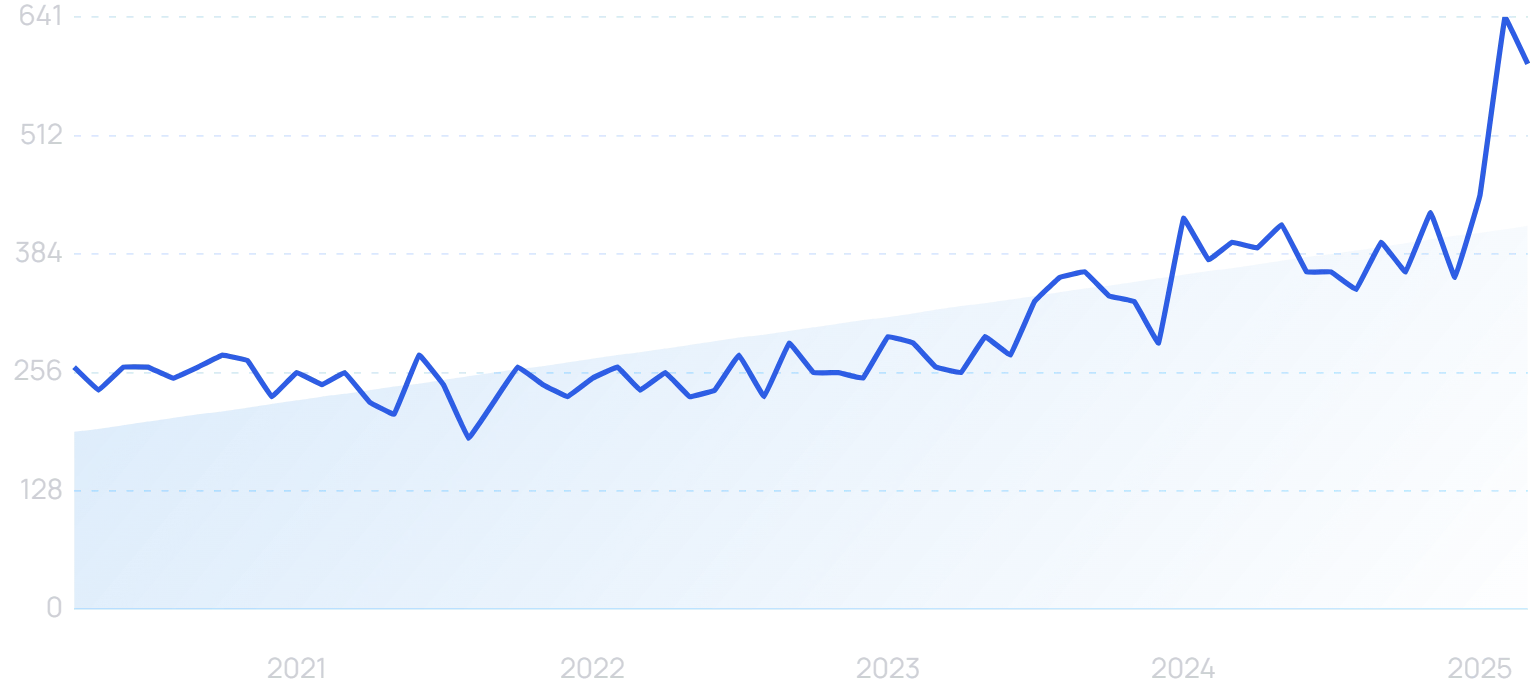

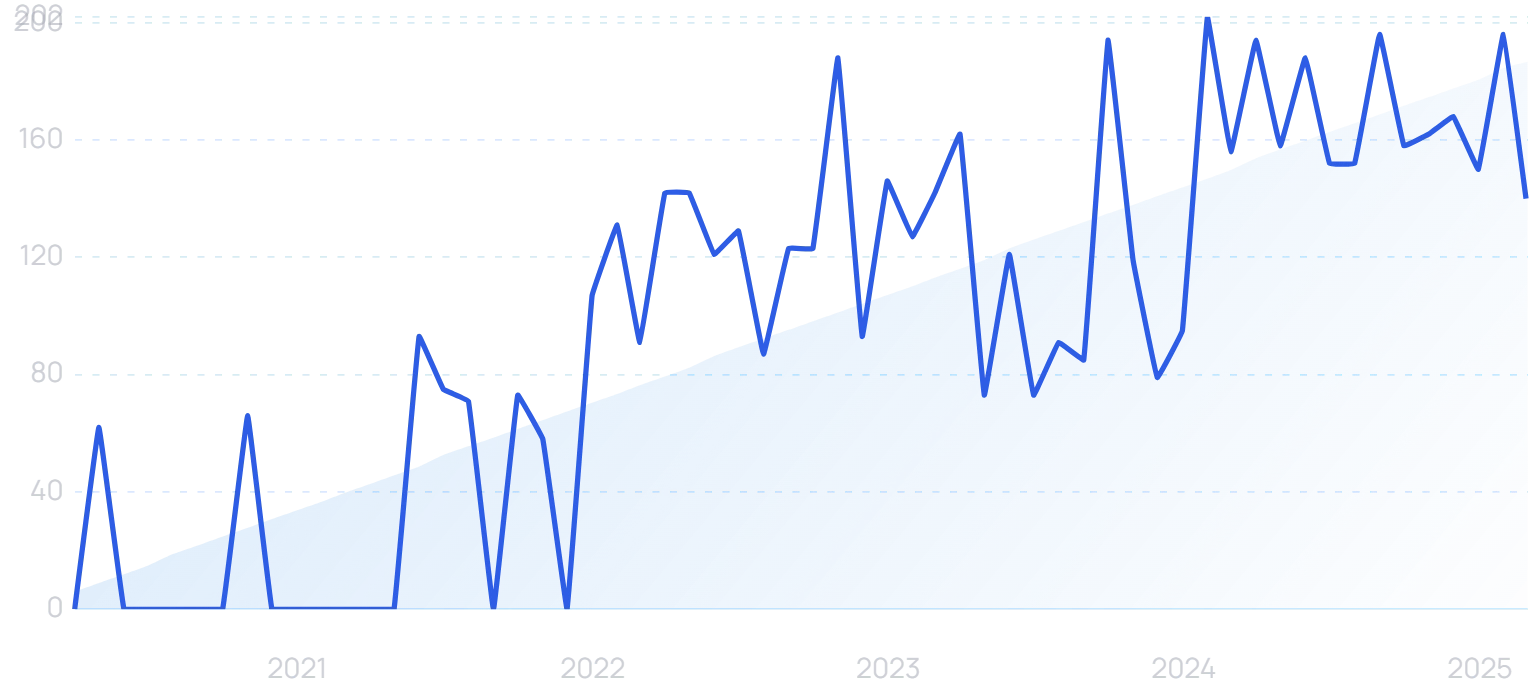

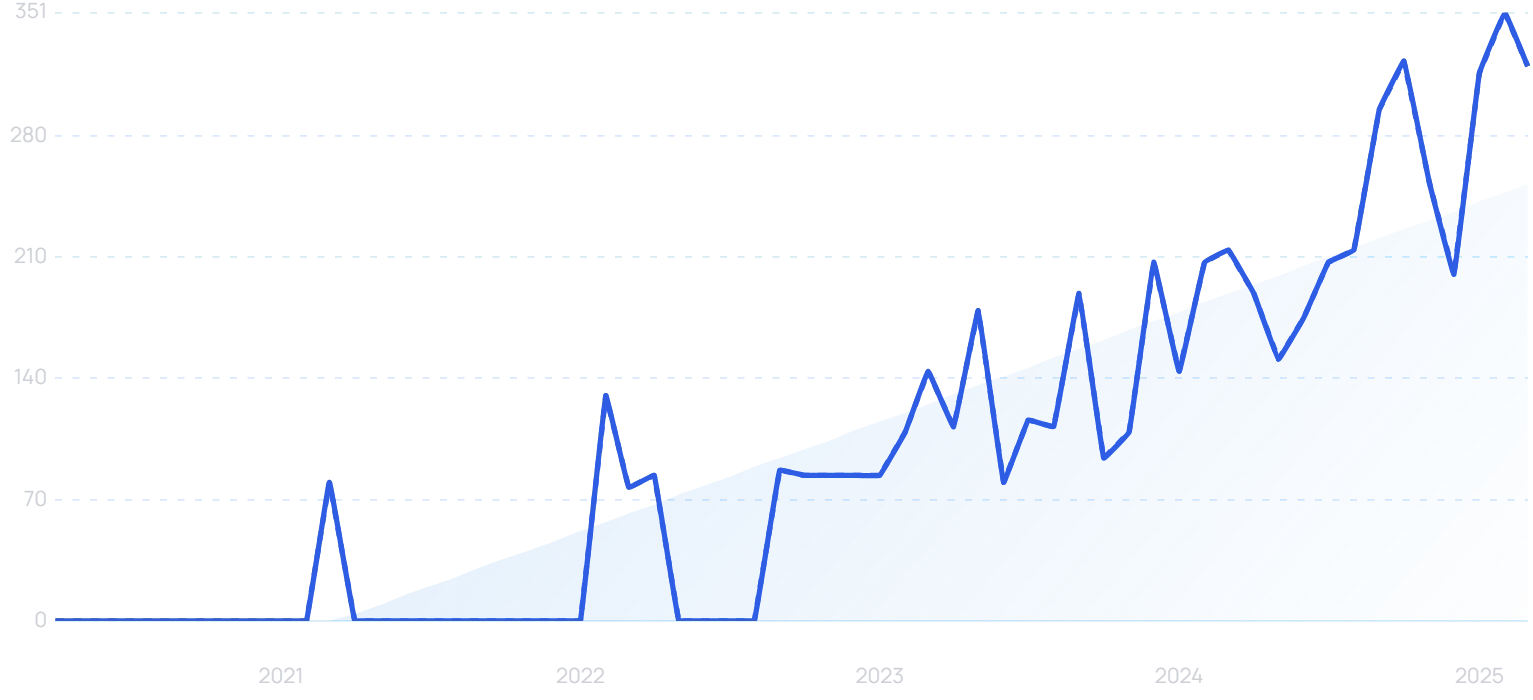

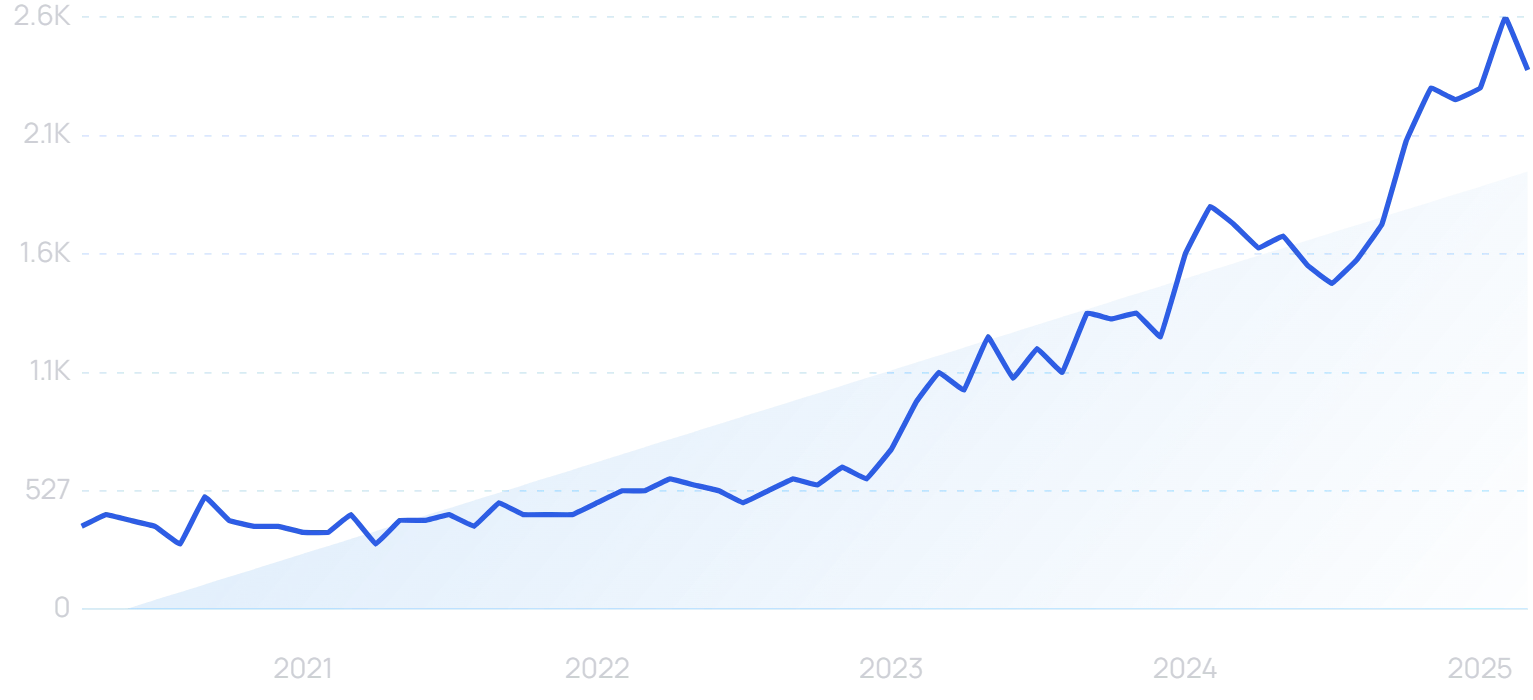

“Healthcare automation” searches are up 124% in the last 5 years.

Practitioners in critical care, internal medicine, neurology, and oncology spend an average of 18 hours a week on paperwork and administrative tasks. Using AI to automate some or all of that work would free up a significant amount of extra time to see more patients.

More than half of health leaders say their organizations have already implemented automation in clinical data entry, with a further 33% planning to do so within the next three years.

And such is the rush to offer automation solutions to the industry, “healthcare AI” is now considered a hard keyword to rank for, requiring 276 high-authority referring domains and well-optimized content.

Investment flows into healthcare AI

Investors poured $56 billion into generative AI firms in 2023-24. In fact, AI startups claimed approximately 35% of all startup funding last year.

The healthcare sector has felt some of that benefit. Total venture investment in the industry grew by 17% in 2024, fueled by some big bets on healthcare AI.

In January 2025, Qventus raised a $105 million Series D round to develop “AI teammates” for medical personnel. Its technology seeks to reduce staff workloads through effective automation.

“Qventus” searches are up 31% in the last 5 years.

By simplifying and automating scheduling, Qventus says it can fill more than 50% of released operating room (OR) time blocks that might otherwise go unused. In total, it can unlock up to 9% more primetime OR capacity.

In March, Navina raised a $55 million Series C for its AI-enabled clinical intelligence platform aimed at primary care physicians. Goldman Sachs Alternatives led the round.

Innovaccer has also raised funds in 2025, completing a $275 million Series F. Its AI cleans up patient data to surface better insights, and saves clinicians “hours a day” by automating documentation.

AI unlocks precision medicine

While scheduling, note-taking and the less glamorous side of AI might have the most immediate impact on the bottom line for healthcare companies, there are also some exciting industry-specific applications of the technology that could ultimately change the landscape in more radical ways.

In particular, AI is already starting to unlock advances in precision medicine.

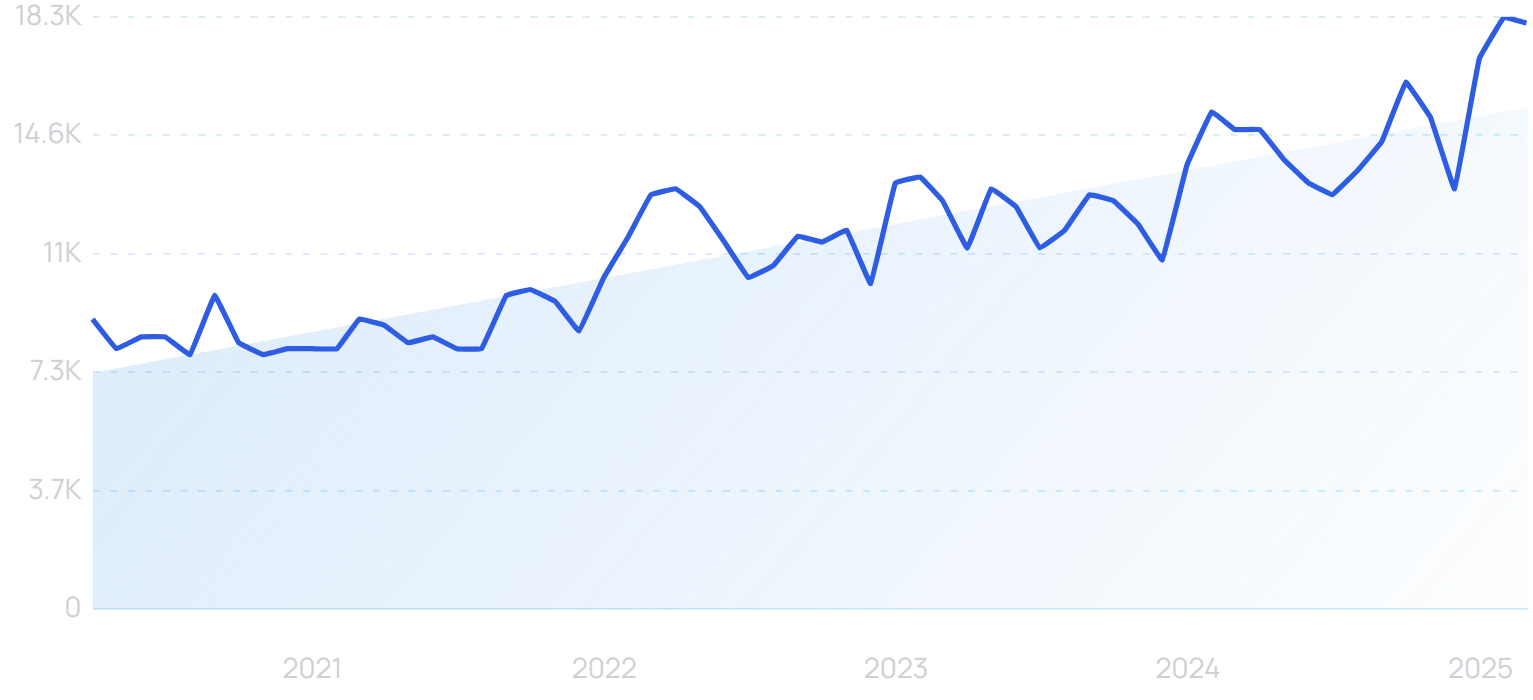

Searches for “precision medicine” are up 102% in the last 5 years.

Precision medicine involves treating patients based on their unique characteristics. While treatment plans have always been somewhat individualized, AI allows practitioners to be far more granular.

AI can process entire medical histories far more quickly and easily than a doctor or nurse. 97% of healthcare data currently goes unused, but digital transformation is beginning to harness this mine of information.

There’s the potential hurdle of what’s known as “healthcare interoperability”. This refers to the ability to access and share data between departments and organizations.

It’s a necessary step in using the full power of AI, but one which requires a certain amount of restructuring, as well as safeguards regarding patient privacy.

But in theory, AI can be used to quickly generate an extremely thorough patient profile. It can even draw from the “Internet of Medical Things” (IoMT), incorporating data from connected devices like smartwatches.

“IoMT” searches have risen by 173% in the last 5 years.

Digital twins

Using all this data, AI can help to create a “digital twin” of a patient. This is a virtual recreation of the physical patient.

Digital twins are highly useful for running simulations and calculating optimal treatment paths. For instance, they can help to ascertain ideal dosages and timing of medicines.

“Healthcare digital twin” searches are still low, but are trending upward over the long term.

At Johns Hopkins, digital twins of patient hearts are being used to predict arrhythmias and adjust treatment accordingly. Simulated electrical waves are sent through the digital twin, allowing practitioners to see if they interact unusually with any scarring or damage — which can then be removed in real life.

These advances produce better medical outcomes. But they can also help to strengthen the trust between patients and healthcare providers.

The Deloitte study found that 43% of consumers are now using connected monitoring devices. If this data is taken on board and used to help devise a treatment plan, patients will feel as though they have been heard.

Making patients feel heard is a major challenge for the industry. In one study, 46% of patients reported “never or rarely” being asked for self-assessments of their conditions, which one participant described as “degrading and dehumanizing”.

AI transforms drug trials

Digital twins can be used in another medical context to radically change the process of drug trials. With AI helping to create a significant body of digital patient replicas, there’s a strong case for running simulations of new medicines on these virtual counterparts.

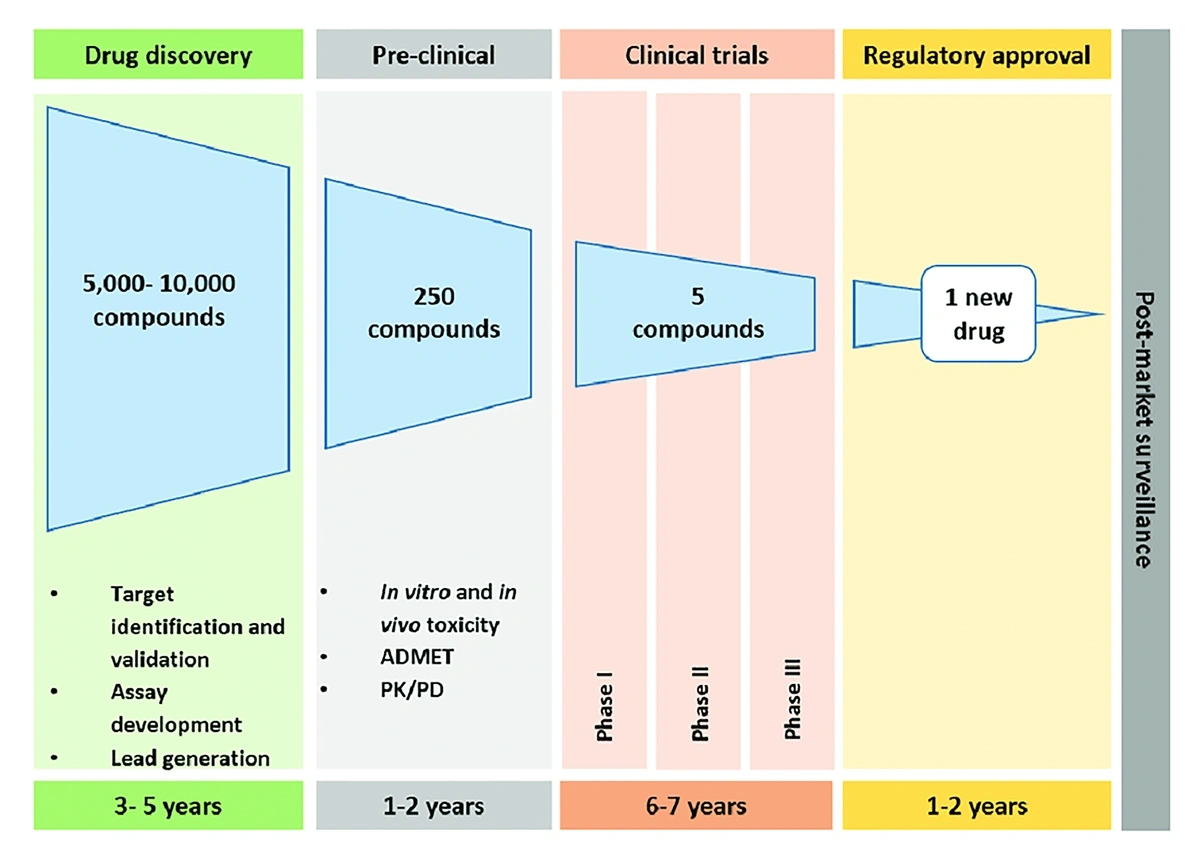

Most obviously, this removes the risk of adverse effects encountered in physical drug trials. But it also has the potential to speed up the process by orders of magnitude: the timeline from initial discovery to full approval is currently often 10 years or more.

One study placed the average drug approval pipeline at approximately 15 years.

Additionally, in classic trials, a drug could fail because the average patient response falls short of the trial’s target. By using digital twins, it is easier to isolate groups of patients where a drug is working particularly well, providing alternative routes to commercial and medical viability.

Drug companies are still testing on humans in the latter stages of development, and that’s unlikely to change any time soon. But Sanofi is using digital twins to effectively “skip” from a Phase 1 study to a Phase 2b study, with virtual patients used to establish optimal dosage.

AI drug discovery

Even before reaching the trial stage, AI has a massive role to play in drug development.

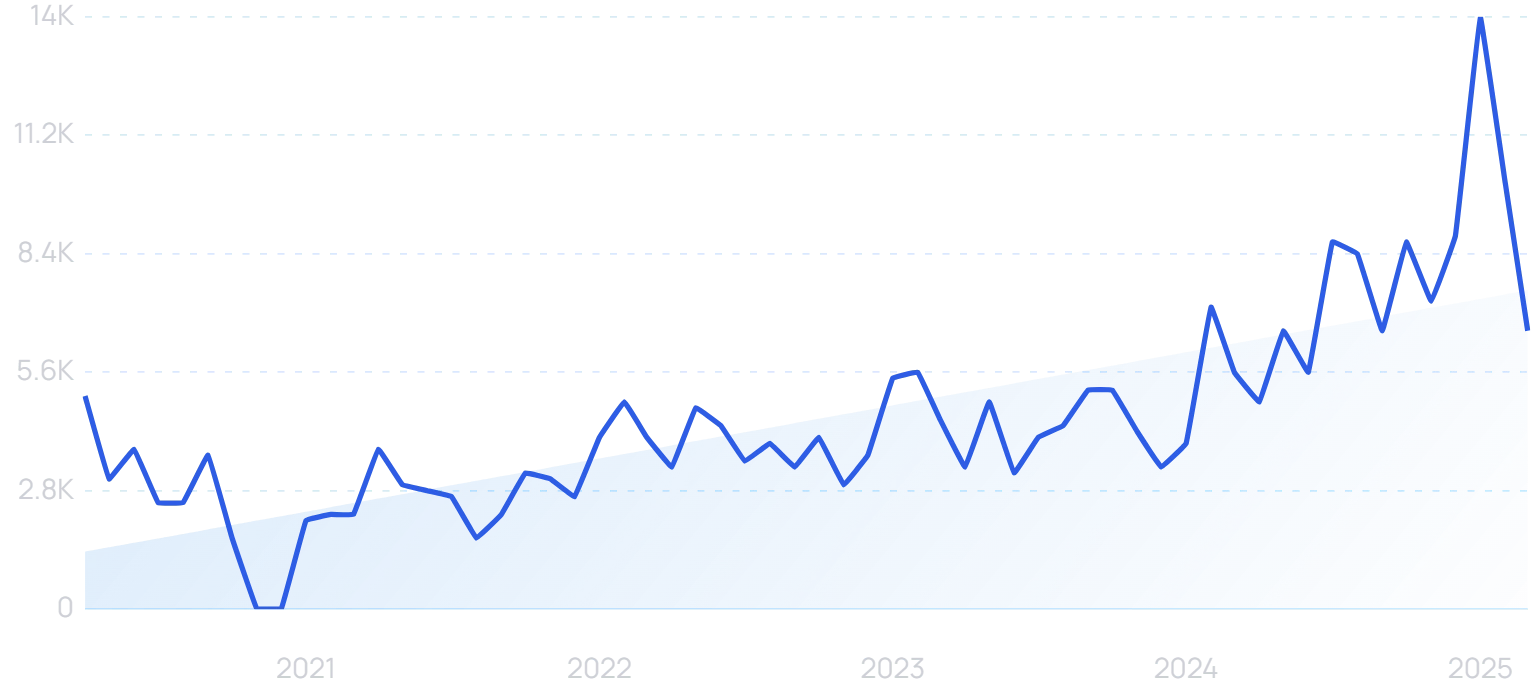

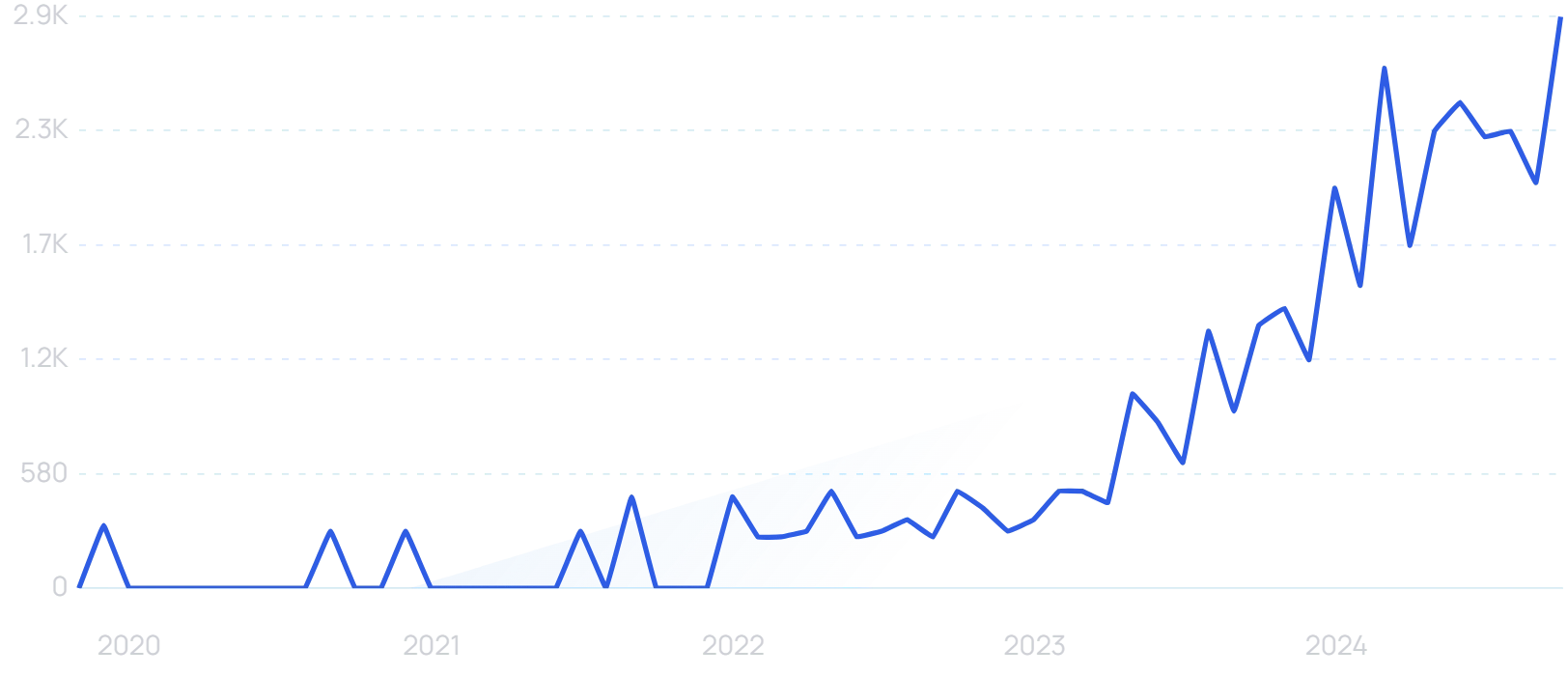

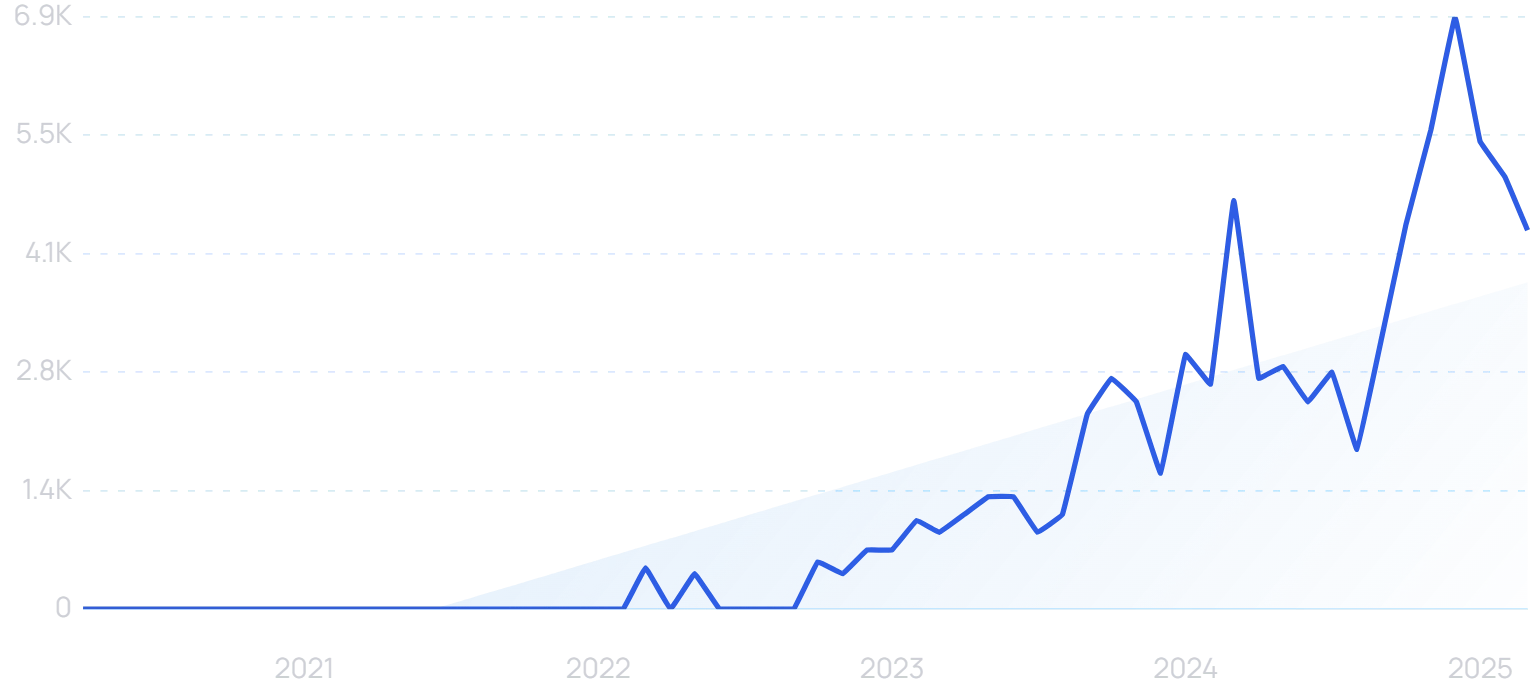

“AI drug discovery” searches are up 394% in the last 5 years.

AI can drastically enhance the drug discovery process. Trained on a vast array of relevant data, machine learning models can predict at a far earlier point which medicines are worth taking to the trial stage.

As well as acting as an early filter, ever-more advanced AI can also pose entirely novel drug suggestions.

Last year, 46 “AI-discovered” drugs reached phase 2 and 3 clinical trials. In total, at least 75 “AI-discovered molecules” have entered clinical trials.

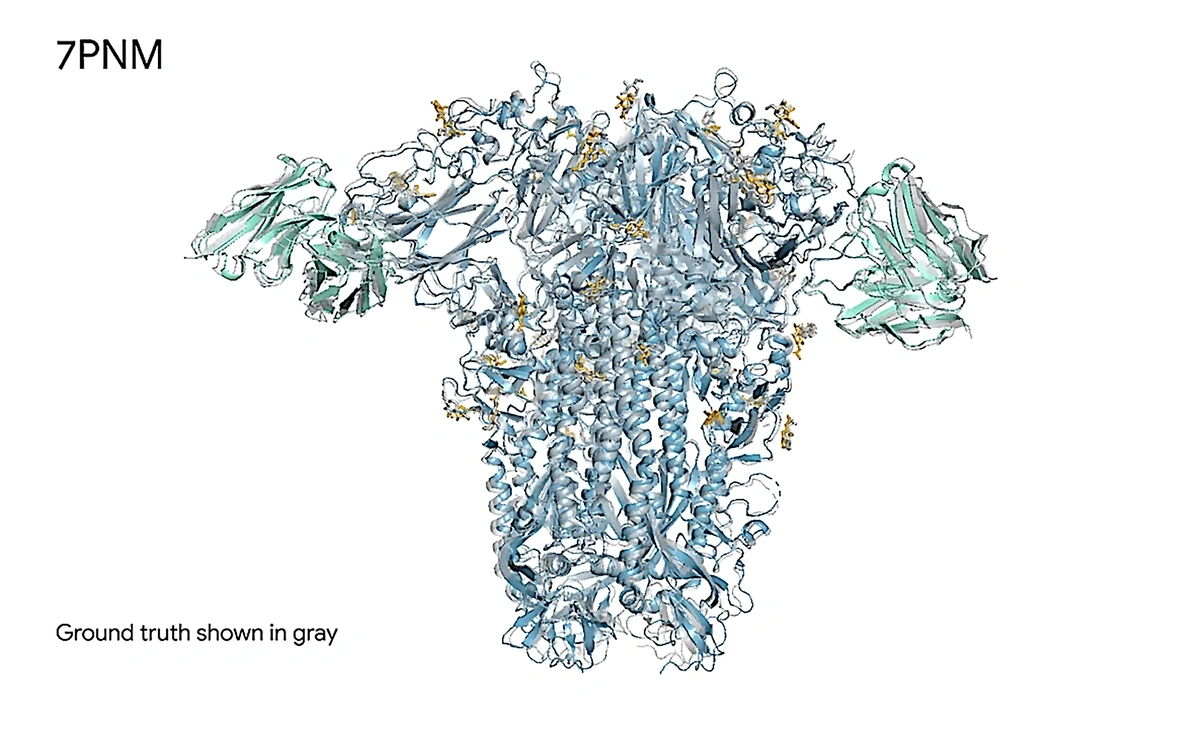

Google DeepMind and Isomorphic Labs have collaborated on AlphaFold 3. Drawing on a vast protein data bank, the AI model can accurately predict how proteins and other biomolecules will interact on a molecular level.

AlphaFold 3 correctly predicted the true structure of a cold virus spike protein interacting with antibodies and simple sugars.

Isomorphic Labs is itself a drug discovery startup. Together with Google, it has since partnered with other pharmaceutical companies to make the AlphaFold 3 model available in drug development.

Deals with Eli Lilly and Novartis could be worth up to $2.9 billion, depending on the achievement of future milestones.

Using its own AI models, Insilico Medicine has taken a treatment for idiopathic pulmonary fibrosis to clinical trials within 18 months. The process would usually be expected to take 4 years.

Patient-facing AI

AI is clearly already having a real impact on healthcare behind the scenes. But will patient-facing artificial intelligence ever become the norm?

There is already a vast array of “AI doctors” online.

“AI doctor” searches are up 417% in the last 5 years.

In one study, ChatGPT-4 scored an average of 90% when trying to diagnose a condition from a case report. Doctors acting alone scored 74%, while doctors aided by AI scored 76%.

Remarkably, another recent study even found that patients rated AI far higher than human doctors in terms of the empathy of their responses. ChatGPT was rated “empathetic” or “very empathetic” 45% of the time, compared to just 4.6% for physicians.

Even so, it seems unlikely that AI will be used this way in a clinical, institutional setting any time soon.

AI robotic surgery

When discussing front-line medical automation, robotic surgery is never too far from the conversation. AI is impacting the landscape here as well.

“AI robotic surgery” searches are trending upward.

The “traditional” concept of robotic surgery is better referred to as robot-assisted surgery, with trained human surgeons guiding robotic arms to complete operations with greater precision. But what if the robotic equipment came equipped with AI?

Believe it or not, that is a question being asked in medical circles. A Johns Hopkins and Stanford University study trained a robot on hours of videos of skilled surgeons, after which it was able to execute the same surgical procedures with equal skill levels.

A senior author on the paper described it as a “significant step forward toward a new frontier in medical robotics”.

Robots are already used in one form or another in 4 million surgeries per year. The human role may well trend gradually from control to oversight.

As with the idea of AI primary care physicians, however, AI robotic surgery would need to become both more trustworthy and more trusted before becoming genuinely commonplace.

The need for trust

We’ve seen how AI can actually be a driver of trust when used to pursue more personalized, patient-driven precision medicine. But at least for now, the US public does not trust AI to play a front-and-center role in a healthcare setting.

According to one of the Deloitte studies, 30% of consumers do not trust information provided by generative AI. 80% would want to be informed if a doctor was using AI in the medical decision-making process.

And the second Deloitte report was clear about the crisis of trust facing the healthcare industry. It’s an even higher priority for industry leaders than consumer affordability challenges.

Before AI can be widely used in any kind of patient-facing setting, healthcare bodies therefore need to ensure they have very robust AI governance frameworks.

Searches for “AI governance framework” are up 7000% in the last 5 years.

An AI governance framework sets parameters for how AI will be developed and deployed within an organization. This is of vital importance in the medical profession, where there are questions of accountability to consider in the event of an AI misdiagnosis.

The World Health Organization has officially called for caution in the deployment of AI technology. It cites a list of concerns, including:

- The potential for bias in training data

- The risk of using private patient data to train LLMs

- The danger of convincing but medically inaccurate AI responses

The need for accuracy

Clearly, the need for accuracy in a medical setting is paramount. And while AI has beaten doctors in certain studies, models like ChatGPT currently remain susceptible to “hallucinating” plausible-looking but inaccurate information.

“AI hallucination” searches are up 285% in the last 2 years.

Human doctors might make a misdiagnosis. But these hallucinations introduce an entirely new class of potential error into the diagnostic process.

A National Institutes of Health study found that even when an AI model made the right final choice, it would often incorrectly describe medical images and give flawed reasoning behind a diagnosis. When allowed to consult external resources, physicians also diagnosed more accurately than the AI, especially on questions ranked most difficult.

AI needs to be more reliably accurate before it can be used on the front line in this manner. And perhaps even more critically, consumer trust in the technology’s accuracy needs to be built before such use cases are viable.

“Low-grade” clinical decisions

There are some clinical decisions AI can already make in its current form. In particular, the technology is proving useful in decisions one step removed from diagnostics.

A study at the University of California found that ChatGPT successfully identified the more urgent case in 88% of triage scenarios. Physicians made the right call 86% of the time.

The study noted that more tests would be needed before AI could be deployed responsibly in this manner in an emergency department setting. But across the healthcare industry as a whole, there is a definite movement toward automation of task prioritization.

36% of healthcare leaders have already implemented automation for the prioritization of clinical workflows. 41% plan to do so in the next three years, making it the largest single area of planned healthcare automation.

AIDOC has rolled out AI triaging software in over 1000 medical centers, including 7 of the 10 top-ranked US hospitals. Its software analyzes 3 million patients every month.

AIDOC’s AI triaging is already in use worldwide.

Away from triage settings, AI is already being used extensively to help analyze medical scans.

Of the 950 AI-enabled medical devices approved by the FDA, over 700 relate to radiology.

“AI radiology” searches are up 550% in the last 5 years.

Spectral AI is among the organizations working to apply AI to medical imaging. It specializes in scanning wounds.

Its DeepView technology predicts how wounds will heal, using scan information not visible to the human eye. Doctors can then use that information to inform treatment plans.

“Spectral AI” searches have grown 6300% in the last 5 years.

Spectral has received more than $7 million in US government funding.

Healthcare leaders must invest in AI to drive growth

I believe healthcare leaders are right to be optimistic about the outlook for the industry in 2025. But to reach these favorable outcomes, they must invest.

AI is here to stay, and its use cases will only increase as the technology becomes more sophisticated. In terms of immediate applications, there’s a vast amount of administrative automation still to be done.

As for specifically medical applications of AI, there are areas where the healthcare industry can already make use of the technology, notably in precision medicine, drug discovery, triage, and medical imaging.

And perhaps most importantly of all, the healthcare industry must work to build trust with patients. The growing use of AI within the sector makes this even more vital, and even more challenging — but when used responsibly, the technology can help to reinforce trust rather than undermine it.

Written By

James is a Journalist at Exploding Topics. After graduating from the University of Oxford with a degree in Law, he completed a...